Illinois lawyer Mathew Kerbis markets himself as the Subscription Attorney, charging businesses and individual clients a monthly rate for legal advice and offering additional services like contract review and legal document drafting for a flat fee.

Kerbis is a fairly tech-savvy lawyer—he’s a regular at the American Bar Association’s ABA Techshow conference, he hosts a podcast about subscription-based billing and other industry innovations, and he uses a Stripe-integrated web portal to streamline client payments. So it’s not surprising that he’s spent time experimenting with AI tools to help him do legal research, draft documents, and otherwise assist clients more efficiently.

“The faster I can get something to a client, if you think about it in terms of time equals money, the more money I make,” he says. “But also, the more valuable it is to the clients to actually get things faster.”

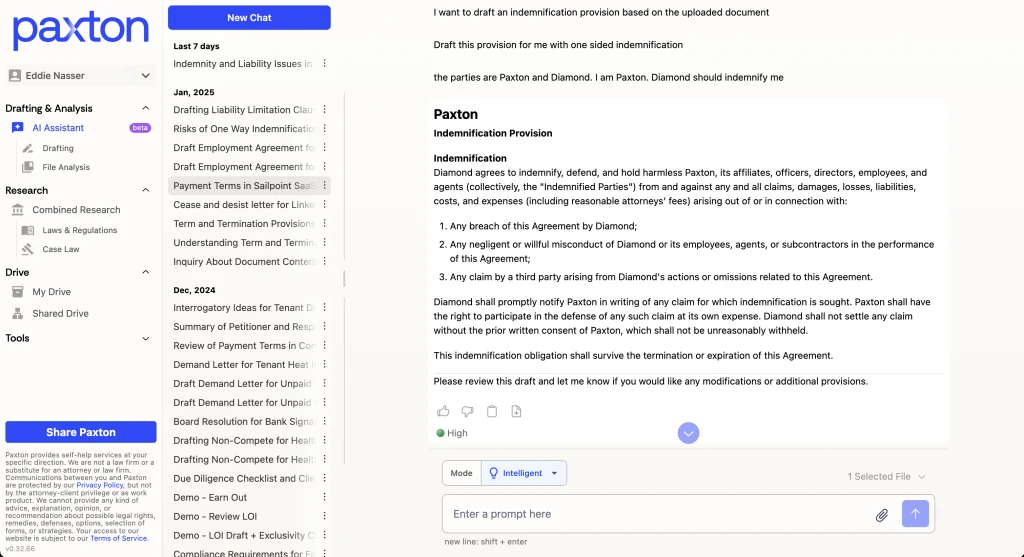

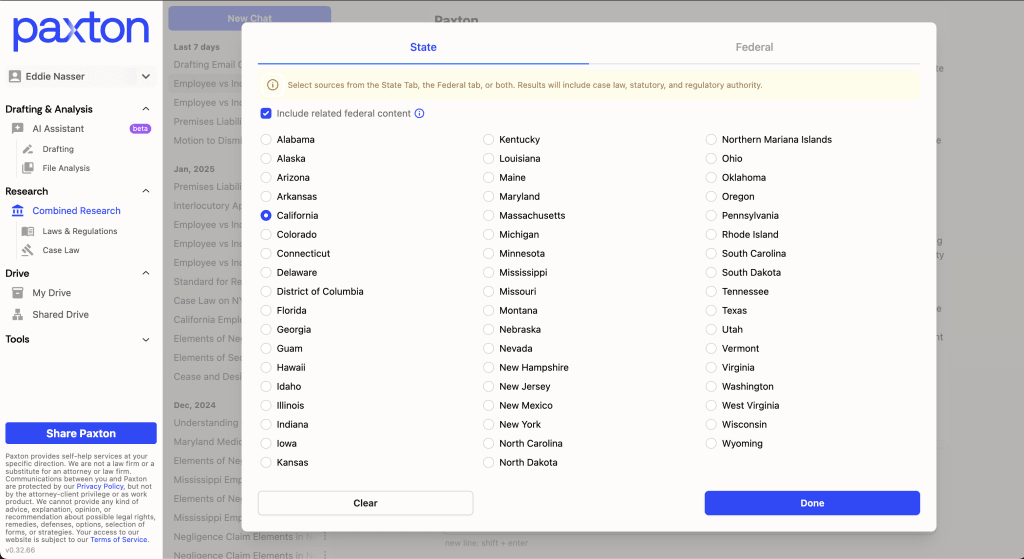

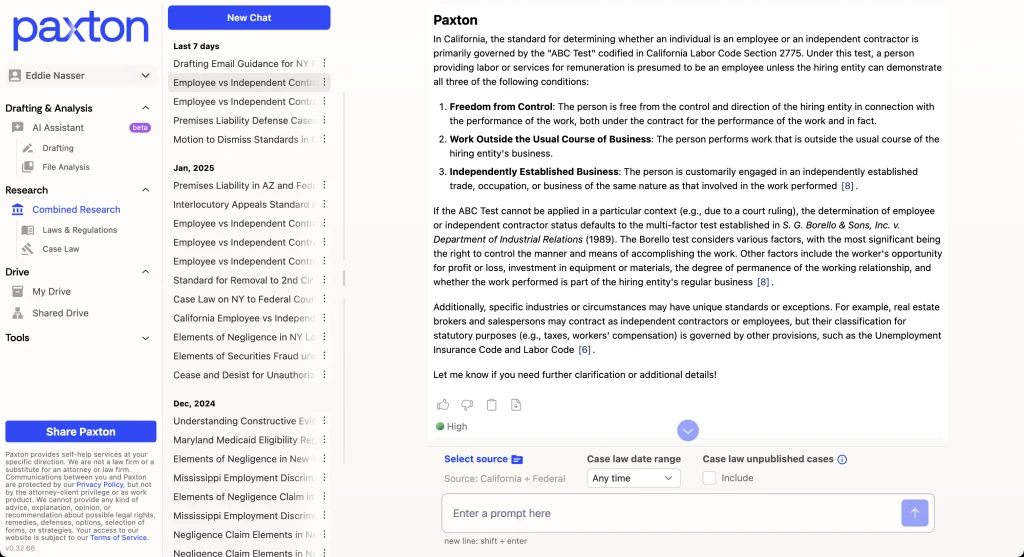

Today, Kerbis is a customer of Paxton AI, a legal AI provider that boasts it can help lawyers quickly draft legal documents, analyze everything from contracts to court filings, and conduct research on legal questions based on up-to-date laws and court precedent. He says Paxton can help tweak model contracts for a client’s situation, find relevant sections of law for a particular legal issue, and review proposed agreements for potentially troublesome terms.

Doing the work of a young attorney—at superhuman speed

“If a client books a call with me and gives me a contract right there on the phone with them, we could start identifying problematic issues before I’ve even put human eyes on the contract,” Kerbis says.

Paxton, a startup that just announced a $22 million Series A funding round, is one of a number of companies offering AI-powered assistance to lawyers. Its competitors range from established legal data vendors like LexisNexis and Thomson Reuters to other startups, all looking to use the text-processing power of large language model AI to more speedily parse, analyze, and draft the voluminous and precise documents that are inherent to the practice of law—and part of the reason for lawyers’ notoriously long hours.

“The idea is to do the work of, say, a young assistant or even a young attorney at a firm, but to do it at superhuman speed,” says Jake Heller, head of product for CoCounsel, a legal AI tool from Thomson Reuters. “If you talk to lawyers, I think there’s a universal feeling that there aren’t enough hours in the day to do all the things they want to do for the companies they work for, their clients, their law firms.”

A 2024 study by legal tech provider Clio found that 79% of legal professionals already use artificial intelligence tools to some degree. Roughly 25% “have adopted AI widely or universally,” according to the survey. Clio CTO Jonathan Watson says Clio Duo AI is the fastest-growing product in the company’s history; it helps lawyers answer questions about particular documents, schedule meetings, and even analyze data from the company’s law firm management software—all of which allows them to focus more on legal work and less on rote tasks.

“What we ultimately want to do is free them of that burden, so they can get back to doing what they do best, and that’s practicing law,” Watson says.

Putting AI through law school

And while lawyers’ misuse of AI has sometimes generated headlines when they’ve submitted court filings with hallucinated quotes and citations, legal AI providers generally say they’ve built their software with guardrails to reduce such errors—as well as protections for attorney-client confidentiality—and linked their language models to specialized legal knowledge bases.

“In order to be a proper legal assistant, you need legal training,” says Paxton AI cofounder and CEO Tanguy Chau. “And what that means to us is a complete understanding of all the laws, rules, regulations, statutes that govern the legal practice.”

Paxton AI has used a large language model to help analyze court decisions and document how they reference each other in a network graph structure, says cofounder and CTO Michael Ulin. “We have the LLM make a determination as to whether it’s been upheld or overturned,” he says, something that was historically done by human attorneys updating legal reference books and databases.

Those sorts of materials are, in fact, part of what helps power CoCounsel, with Heller pointing to a company history of publishing legal information and references dating back to the founding of lawbook giant West Publishing—now part of Thomson Reuters—in the 1800s.

“They’ve hired some of the best attorneys in the country and said, ‘Well, give us your commentary or thoughts on this topic,’” Heller says, with that material now accessible to the AI. “It’s able to also do what a lawyer would do, which is draw from these resources, read them first, get a deep understanding of a topic or field from the world’s best experts that really only we have, and then that informs its decision-making process.”

Lowering the risk of hallucination

Legal AI tools generally use the technique known as retrieval-augmented generation (RAG), in which search-engine-like processes first locate relevant source materials, then provide them to language models to use in providing a well-cited, on-point response with less risk of hallucination.
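To make the retrieve-then-generate flow concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not how any vendor named in this article builds its product: the three-entry corpus is invented, the "Example Code" citations are placeholders rather than real statutes, and `retrieve` uses naive word overlap where a production system would use a real search index. The final step, sending the prompt to a language model, is left out.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Source:
    citation: str  # placeholder citation, not a real statute
    text: str


# Invented corpus for illustration only.
CORPUS = [
    Source("Example Code § 1", "A residential lease longer than one year must be in writing."),
    Source("Example Code § 2", "A landlord must return a security deposit within thirty days."),
    Source("Example Code § 3", "Speed limits in school zones are twenty miles per hour."),
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(query: str, corpus: list[Source], k: int = 2) -> list[Source]:
    """The 'R' step: rank sources by how many words they share with the query."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(s.text)), s) for s in corpus]
    scored = [(score, s) for score, s in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable, highest overlap first
    return [s for _, s in scored[:k]]


def build_prompt(query: str, sources: list[Source]) -> str:
    """The 'A' step: put the retrieved text in front of the model, with citations."""
    numbered = "\n".join(
        f"[{i}] {s.citation}: {s.text}" for i, s in enumerate(sources, start=1)
    )
    return (
        "Answer using only the numbered sources below and cite them.\n"
        f"{numbered}\n"
        f"Question: {query}"
    )


question = "Must a lease be in writing?"
hits = retrieve(question, CORPUS)
prompt = build_prompt(question, hits)  # this string would be sent to a language model
```

Even in this toy version, the second-ranked hit is about security deposits and matches only on common words like "must" and "a", a small reminder that whatever the retrieval step returns is what the model will treat as its source material.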

“Even several years ago, it was very clear that the retrieval—you know, the R element to the RAG—was the most important part of this whole process, being able to properly identify what cases are responsive. Not just cases, but legislation, regulation,” says Mark Doble, cofounder and CEO of Alexi, which offers legal AI with a focus on litigation. “And so we’ve done a ton of work in making sure that the retrieval component is really good.”

Alexi has also developed rigorous automated and manual systems to verify that its AI gives accurate responses consistently and with statistical predictability, Doble says. Similarly, CoCounsel is routinely quizzed on tens of thousands of test cases, Heller says, and Paxton has published on GitHub its AI’s results on two legal AI benchmarks. One of them was developed by scholars at Stanford and Yale as part of a study of legal hallucinations by LLMs, and Paxton claims a nearly 94% accuracy rate on it.

Still, even the most rigorously tested AI systems aren’t immune to making mistakes. RAG-powered systems can still hallucinate, particularly if the underlying retrieval process produces irrelevant or misleading hits, something familiar to anyone who has read the AI summaries that now top many internet search results.

“From the perspective of general AI research, we know that RAG can reduce the hallucination rate, but it is no silver bullet,” says Daniel E. Ho, a professor at Stanford Law School. Ho is one of the authors of the legal hallucination study and an additional paper looking specifically at errors made by legal-focused AI.

In one example, Ho and his colleagues found that a RAG-powered legal AI system asked to identify notable opinions written by “Judge Luther A. Wilgarten” (a fictitious jurist with the notable initials L.A.W.) pointed to a case called Luther v. Locke. The match was presumably a routine false-positive search result triggered by the made-up judge’s first name. While a human lawyer searching a decision database would have realized the mix-up and quickly skipped past that search result, the AI was apparently unable to note that the case was decided by a differently named judge.

“It would not surprise any lawyer who’s spent time trying to research cases that there are going to be false positives in those retrieved results,” Ho says. “And when those form the basis of the generated statement through RAG, that’s when hallucinations can result.”
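The mechanics of that kind of false positive can be sketched in a few lines of Python. Everything here is hypothetical: the `Opinion` records, the placeholder author names (which are not the real authoring judges of any case), and the `written_by` check, which is one illustrative guard and not something the study or any vendor is described as using.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Opinion:
    case_name: str
    author: str  # placeholder value, NOT the real authoring judge


# Hypothetical records for illustration only.
OPINIONS = [
    Opinion("Luther v. Locke", "Placeholder Judge"),
    Opinion("Smith v. Jones", "Other Placeholder"),
]


def words(text: str) -> set[str]:
    """Split on whitespace, strip trailing punctuation, and lowercase."""
    return {w.strip(".,").lower() for w in text.split()}


def keyword_search(query: str, opinions: list[Opinion]) -> list[Opinion]:
    """Naive retrieval: return any opinion sharing a word with the query."""
    terms = words(query)
    return [o for o in opinions if terms & words(f"{o.case_name} {o.author}")]


def written_by(judge: str, hits: list[Opinion]) -> list[Opinion]:
    """Keep only hits whose recorded author matches the requested judge."""
    return [o for o in hits if o.author.lower() == judge.lower()]


judge = "Luther A. Wilgarten"
hits = keyword_search(judge, OPINIONS)  # matches on the word "Luther" in the case name
confirmed = written_by(judge, hits)     # nothing survives the author check
```

The search step surfaces Luther v. Locke because of the shared first name; only the explicit comparison against the author field shows that no retrieved opinion was actually written by the requested judge. A check like this catches just one kind of mismatch.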

Even so, Ho says, the tools can still be useful in legal research, drafting, and other tasks, provided they’re not treated as out-and-out replacements for human lawyers and, ideally, provided AI developers disclose how the tools are built and how they perform.

“The lawyer is fully responsible for the work on behalf of the client”

Since lawyers are already used to picking apart documents, arguments, and citations, as well as double-checking the work of junior associates and paralegals, they may be as well equipped as any professional to use AI as a helpful tool—the first or last pair of eyes on a document, or a path to finding case law relevant to a particular question—rather than an omniscient oracle.

“This is something you’re asking to infer how to respond to you, and you need to look at that with a critical eye and go, does that make sense?” says Clio’s Watson.

As some attorneys using general-purpose AI tools have learned the hard way, groups like the American Bar Association have said relying on AI doesn’t absolve lawyers of their basic duties to competently represent their clients, safeguard their confidentiality, and ensure what they present in court is accurate and truthful. “In short, regardless of the level of review the lawyer selects, the lawyer is fully responsible for the work on behalf of the client,” according to a formal American Bar Association opinion from July 2024.

Experts have suggested that lawyers who rely on mistaken AI-generated information could be sued by clients for malpractice. Even law-focused AI solutions often disclaim legal responsibility for errors, meaning lawyers are likely on the hook for any AI mistakes they fail to catch.

Kerbis says he uses Paxton AI to find on-point sections of the law, but he’ll still read the actual cited references. And when he asks the AI to find potential red flags in a contract, he’ll naturally evaluate them himself.

Other Paxton customers use the tool to look for the relevant “needle in a haystack” in bulky document repositories, like medical records involved in an injury suit, Ulin says. Since AI can work so quickly, and its findings can often be quickly verified by human attorneys, there may be little reason not to at least see what it can find. Kerbis says the AI sometimes flags a section of a contract that’s ultimately unobjectionable, like an unusually long contract term that actually benefits his client, but such findings are easy enough to skip past.

Since Kerbis generally charges clients flat fees instead of an hourly rate, it’s to his advantage if he can do good legal work faster. Some experts predict such arrangements will be more common if AI helps more lawyers work more quickly, though previous predictions of the end of the billable hour have proven premature.

Also yet to be determined is whether AI-forward lawyers and law firms will ultimately prefer to get AI tools through an existing vendor like Thomson Reuters or Clio, or from an AI-focused startup like Alexi or Paxton. And it remains to be seen whether the technology will really provide lawyers the level of productivity boost proponents and AI vendors hope for. If it does, it may well become as necessary to the modern practice of law as Microsoft Word.

Already, some of those paying the bills and waiting on legal advice have begun to urge lawyers to adopt AI, says Doble, adding, “It’s not clients getting angry when lawyers use AI tools. It’s clients getting angry when lawyers don’t use AI tools.”
