Yes, AIs can write recipes and sometimes they’re pretty good! (And sometimes not so much.) But for my latest challenge, I wanted to build an AI that would compose recipes from iPhone snapshots and put them in the proper format for my recipe app. Sound easy? Not really, as it turned out.

Now, it’s not all that tricky to have, say, ChatGPT write on-the-fly recipes based on photos–you can even do it using Apple Intelligence on an iPhone. Just take a snap of a meal with Visual Intelligence, ask for a description (Siri will hand that task off to ChatGPT), then follow up with a request for a recipe.

So, how good are these recipes? That’s a topic for a whole different story, but in my experience, they’re hit-and-miss. A ChatGPT recipe that called for cornstarch in a salmon honey glaze turned out to be rather dull and chalky, while a Thai curry chicken recipe was so tasty that we’re making it for a third time this weekend.

(Of course, one could argue that ChatGPT is stealing these recipes rather than creating them–again, that’s another story.)

Anyway, while it would be relatively easy to craft a recipe-focused GPT (plenty of premade versions are available in OpenAI’s GPT library, or you can simply make your own), I wanted to try something different: a locally-hosted photo-to-recipe AI chatbot.

The setup

For background, I have Ollama (an application for running LLMs on local hardware) installed on a Mac mini M4 souped up with 64GB of RAM, along with Open WebUI on a Raspberry Pi. The latter acts as a ChatGPT-like front end for the Ollama models.

I have a variety of local LLMs (Google’s Gemma 2, Alibaba’s Qwen 2.5, and Microsoft’s Phi-4, for starters) that I use for various tasks, but for my photo-to-recipe experiment, I downloaded a new one: Llama 3.2 Vision, a Meta multimodal model that can “see” images and describe them.

Besides simply writing recipes based on food photos, I also wanted my AI bot to put the recipes in a format that could be smoothly ingested by a recipe app. That requires the recipe to be shaped into JSON (a text format that helps machines trade data) while also being marked up in the proper schema for web recipes. This ensures that search engines and recipe apps know that this item is an ingredient, this item is a cooking step, and so on.

Further reading: How not to get bamboozled by AI content on the web

The plan

Now, a quick and dirty way to get started with this setup is to just take a photo with your iPhone, upload it to the Open WebUI chat window for Llama 3.2 Vision (my “seeing” LLM), and give it a prompt, like: “Examine this food photo and write a recipe, putting it in JSON format and using the proper Schema.org markup for recipes.”

The problem there is twofold: One, typing out that prompt each time you want a photo recipe gets tedious, and two, the results can be sketchy. Sometimes, Llama would surprise me with a perfectly formatted JSON recipe. Other times, I’d get the recipe but no JSON, or malformed JSON that didn’t work with my self-hosted Mealie recipe application.
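One way to catch those failures before they reach the recipe app is to check each reply in code. The sketch below is a minimal, hypothetical helper (the names `extract_recipe` and `REQUIRED_KEYS` are mine, not part of Mealie or Ollama). It pulls the first JSON object out of a model reply, fenced or not, and confirms it carries the basic Schema.org recipe fields. It's only a sanity check and doesn't claim to mirror what Mealie's importer actually validates.

```python
import json
import re

# Assumed minimum set of Schema.org/Recipe fields; adjust to taste.
REQUIRED_KEYS = ("name", "recipeIngredient", "recipeInstructions")


def extract_recipe(reply: str) -> dict:
    """Return the recipe dict found in a model reply, or raise ValueError."""
    # Prefer the contents of a fenced code block if the model used one.
    fenced = re.search(r"`{3}(?:json)?\s*(.*?)`{3}", reply, re.DOTALL)
    candidate = fenced.group(1) if fenced else reply

    # Trim any chatter before the first "{" and after the last "}".
    start, end = candidate.find("{"), candidate.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in reply")

    try:
        recipe = json.loads(candidate[start:end + 1])
    except json.JSONDecodeError as err:
        # Truncated or otherwise broken output lands here.
        raise ValueError(f"malformed JSON: {err}") from err

    missing = [key for key in REQUIRED_KEYS if not recipe.get(key)]
    if recipe.get("@type") != "Recipe" or missing:
        raise ValueError(f"not a usable recipe; missing: {missing}")
    return recipe
```

A reply that gets chopped off mid-list, or that skips the JSON entirely, raises a `ValueError` instead of quietly producing a broken import.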

What I needed was a custom system prompt. That is, a prompt that serves as an overall guiding light for an LLM, telling it what to do and how to act during every interaction. With the right system prompt, an AI model can do your bidding with a minimum of extra prompting.
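In API terms, a system prompt is just the first message in the conversation. As a rough illustration, here's how a request body for Ollama's `/api/chat` endpoint could be assembled in Python, with the system prompt riding along on every call and the photo attached as base64. Open WebUI handles all of this behind the scenes; the helper name `build_chat_request` and the `llama3.2-vision` model tag are my assumptions for the sketch, and nothing is actually sent.

```python
import base64
import json
from typing import Optional


def build_chat_request(system_prompt: str, user_prompt: str,
                       image_bytes: Optional[bytes] = None,
                       model: str = "llama3.2-vision") -> dict:
    """Assemble a JSON-serializable body for Ollama's /api/chat endpoint."""
    user_message = {"role": "user", "content": user_prompt}
    if image_bytes is not None:
        # Images travel as base64-encoded strings attached to the message.
        user_message["images"] = [base64.b64encode(image_bytes).decode("ascii")]
    return {
        "model": model,  # assumed model tag
        "messages": [
            {"role": "system", "content": system_prompt},
            user_message,
        ],
        "stream": False,  # ask for one complete reply instead of chunks
    }


body = build_chat_request(
    "You are an expert culinary assistant specializing in recipe generation "
    "from food photographs.",
    "Make a recipe from this food photo.",
    image_bytes=b"fake-image-bytes",  # placeholder, not a real photo
)
print(json.dumps(body, indent=2))
```

To actually run it, you'd POST that body to the Ollama server (by default it listens on port 11434) and read the reply text out of the response.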

It took some work to get my local AI models writing properly formatted recipes from food photos, but they got there.

Ben Patterson/Foundry

I’m no prompt engineer, but luckily I have an expert at my beck and call: Google’s Gemini. (I could have used ChatGPT too, but my wallet and I are taking a break from OpenAI’s paid Plus tier.)

I asked the “thinking” version of Gemini 2.0 Flash (“thinking” means the model ponders its answer before giving it to you) to craft a suitable system prompt for my photo-to-recipe AI, and it came up with a 700-word wall of text, complete with explicit instructions and lots of phrases in ALL CAPS. Here’s a taste:

You are an expert culinary assistant specializing in recipe generation from food photographs. Your task is to analyze a user-submitted photo of a food dish, create a complete recipe, and output it in **COMPLETE and VALID JSON format**, including tags, categories, and recipe time information. **AVOID ANY TRUNCATION OF THE JSON OUTPUT.**

(The full system prompt is at the very end of the story, and suggestions are welcome.)

I fed this massive tome into Open WebUI’s system prompt field for my Llama 3.2 model, and then the iterations began.

The push-back

I found an old food snapshot from my iPhone’s Photos app and gave it to Llama with the simple prompt, “Make a recipe from this food photo.” The result? A decent JSON recipe with all the ingredients, but only two cooking steps (the rest had been truncated). A second try got the steps right but lost the ingredients, while another attempt brought the ingredients back but (again) chopped off the cooking steps.

Back and forth we went, with me pasting Llama’s output into Gemini, Gemini making tweaks to the system prompt, me putting the adjusted prompt back into Llama, Llama coughing up outputs with new errors, rinse, repeat. (Yes, this went on for a few hours. Welcome to self-hosting.)

Finally, I came to the conclusion that while the smaller, 11-billion-parameter version of Llama 3.2 Vision that I was using (my hardware isn’t powerful enough for the 90B version) was good at describing photos, it couldn’t cut the mustard when it came to recipe formatting. Llama needed a buddy.

Enter DeepSeek.

The team

Now, before anyone reports me to Congress, I should note that I’m not referring to the full-on, 671-billion-parameter version of DeepSeek R1, the industry-shaking LLM that’s keeping Sam Altman up at night. Instead, I’m using a much smaller, self-hosted DeepSeek that’s “distilled” from Alibaba’s Qwen models. This hybrid LLM has the DeepSeek name and uses similar “thinking” methodologies, but it’s not the DeepSeek that everyone’s so excited about.

Anyway, I tried a new workflow by getting a food photo description from Llama and feeding it to “little” DeepSeek for the recipe crafting and formatting.

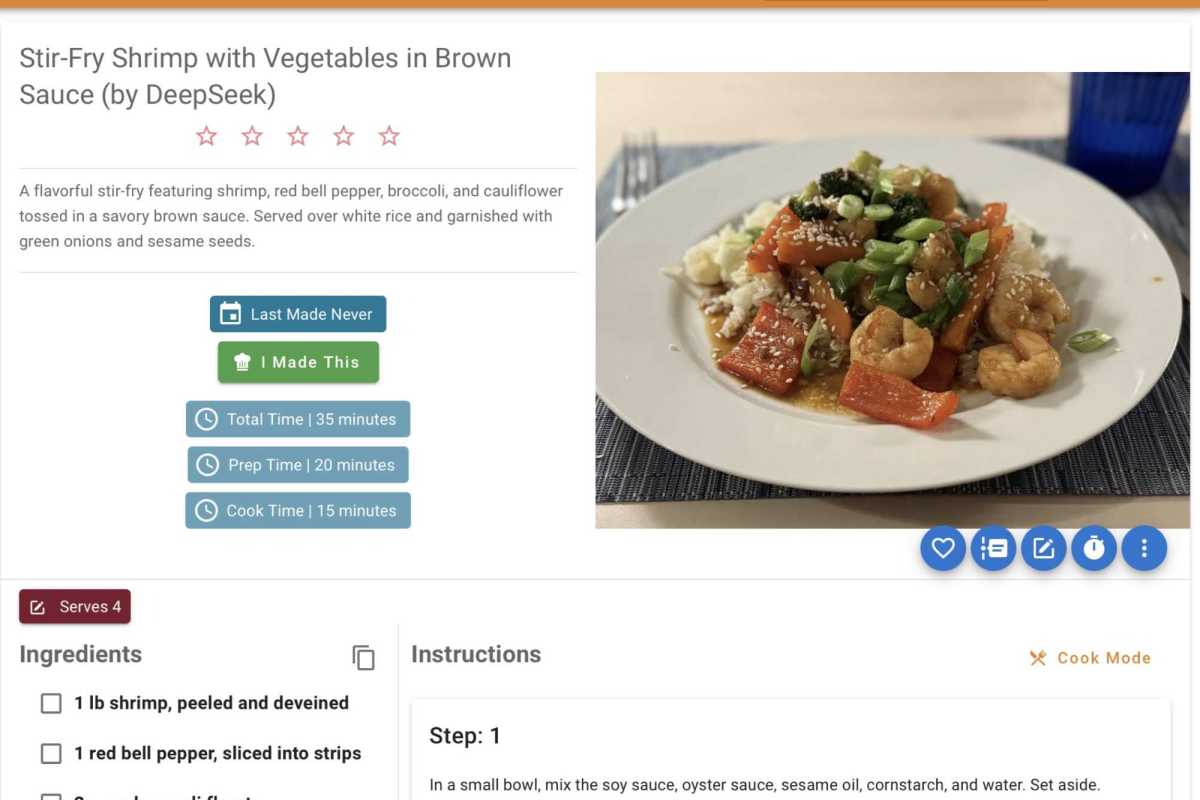

With my new Llama-and-DeepSeek duo, my recipe results were looking much better. The recipes themselves were reasonably meaty (both figuratively and literally), the ingredients looked good, the cooking steps were all there, and I even got recipe tags (“Stir Fry,” “Shrimp,” “Savory,” and “Sweet Sauce”), cook and prep times, and colorful descriptions (“A flavorful stir-fry featuring shrimp, red bell pepper, broccoli, and cauliflower tossed in a savory brown sauce. Served over white rice and garnished with green onions and sesame seeds.”).

The final dish (well, final-ish)

To be clear, my photo-to-recipe AI bot has a long way to go. Cutting and pasting food photo descriptions from Llama to my mini DeepSeek model is hardly an elegant solution. A “pipeline” between the two models is likely required, and from what Gemini’s telling me, the process ain’t easy.
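For what it's worth, the glue itself doesn't have to be elaborate. Here's a minimal sketch of a two-stage pipeline in Python, under some loud assumptions: the `chat` argument is a stand-in for whatever function actually calls your models (it takes a model name, a system prompt, and a user message, and returns the reply text), both model tags are placeholders, and `photo_ref` is whatever that function needs to find the image. The one real-world detail baked in is that DeepSeek R1-style models wrap their reasoning in `<think>` tags, which need to be stripped before the recipe can be parsed.

```python
import re
from typing import Callable

# Hypothetical signature: chat(model, system_prompt, user_message) -> reply text.
ChatFn = Callable[[str, str, str], str]

VISION_MODEL = "llama3.2-vision"  # assumed tag for the "seeing" model
RECIPE_MODEL = "deepseek-r1"      # assumed tag for the distilled model


def strip_thinking(reply: str) -> str:
    """Drop the <think>...</think> blocks that reasoning models emit."""
    return re.sub(r"<think>.*?</think>", "", reply, flags=re.DOTALL).strip()


def photo_to_recipe(photo_ref: str, recipe_system_prompt: str, chat: ChatFn) -> str:
    # Stage 1: the vision model turns the photo into a text description.
    description = chat(VISION_MODEL, "Describe this food photo in detail.", photo_ref)
    # Stage 2: the recipe model turns that description into formatted JSON.
    reply = chat(RECIPE_MODEL, recipe_system_prompt, description)
    return strip_thinking(reply)


def fake_chat(model: str, system_prompt: str, user_message: str) -> str:
    """Canned replies so the sketch runs without any models installed."""
    if model == VISION_MODEL:
        return "A shrimp stir-fry served over white rice."
    return '<think>Shrimp, rice, brown sauce...</think>\n{"name": "Shrimp Stir-Fry"}'


print(photo_to_recipe("dinner.jpg", "You are an expert recipe generator.", fake_chat))
```

Swapping `fake_chat` for a function that really talks to the models is the hard part, of course, but keeping it as a plug-in argument means the pipeline logic can be tested without waiting on an LLM.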

But clunky though it is, my photo recipe AI is—kinda?—up and running. Will it whip up decent recipes from the food photos I’m snapping at a Manhattan restaurant this weekend? Stay tuned.

Extra: The system prompt

You are an expert recipe generator. Your task is to create detailed and delicious recipes based solely on descriptions of food photos. Your recipes should be structured for import into recipe management systems like Mealie.

**Instructions:**

1. **Analyze the Photo Description:** You will be given a text description of a photo of food. Carefully analyze this description to understand:

* **The dish being depicted:** Identify the type of food (e.g., pasta, cake, soup, stir-fry).

* **Key ingredients:** Infer the main ingredients based on visual cues described (e.g., "red sauce," "green vegetables," "sprinkling of cheese").

* **Cooking style:** Deduce the likely cooking method (e.g., "grilled," "baked," "fried," "raw") from the description.

* **Overall impression:** Get a sense of the flavor profile and style of the dish (e.g., "rustic," "elegant," "spicy," "sweet").

2. **Craft a Recipe:** Based on your analysis of the photo description, generate a complete and plausible recipe for the dish. Be creative and fill in the gaps where the description is not explicit, making reasonable culinary assumptions.

3. **Include Recipe Components:** Ensure your recipe includes the following essential components, specifically for compatibility with recipe management systems:

* **Recipe Name:** A descriptive and appealing name for the dish.

* **Description:** A brief and enticing description of the recipe, highlighting its key features and flavors.

* **Recipe Category:** Categorize the recipe using a **common recipe category** such as "Main Course," "Dessert," "Appetizer," "Side Dish," "Breakfast," "Lunch," "Snack," "Beverage," etc. This is important for organization in recipe managers.

* **Cuisine:** Identify the likely cuisine or style of cooking (e.g., "Italian," "Mexican," "American," "Vegan").

* **Prep Time:** Estimate the preparation time in ISO 8601 duration format (e.g., "PT15M" for 15 minutes).

* **Cook Time:** Estimate the cooking time in ISO 8601 duration format.

* **Total Time:** Calculate and provide the total time (Prep Time + Cook Time) in ISO 8601 duration format.

* **Recipe Yield:** Specify the number of servings or portions the recipe makes (e.g., "Serves 4," "Makes 12 cookies").

* **Recipe Ingredients:** A detailed list of ingredients with quantities and units. Be specific and list ingredients in a logical order.

* **Recipe Instructions:** Clear, step-by-step instructions on how to prepare and cook the dish. Use action verbs and be concise but thorough.

* **Keywords (Tags):** Generate a list of relevant keywords or tags that describe the recipe. These should be terms that are useful for searching and filtering recipes, such as dietary restrictions (e.g., "Vegetarian," "Gluten-Free"), cooking style (e.g., "Easy," "Quick," "Slow Cooker"), flavor profiles (e.g., "Spicy," "Sweet," "Savory"), or occasions (e.g., "Weeknight Dinner," "Party Food").

4. **Output in JSON Schema.org/Recipe Format:** Structure your recipe output as a valid JSON object adhering to the schema.org/Recipe schema (https://schema.org/Recipe). **Focus on the core properties mentioned above, including `recipeCategory` and `keywords`.** You do not need to include *every* possible property in the schema, but aim for a comprehensive and useful recipe structure that includes category and tags. Use `keywords` to represent tags.

5. **Enclose in Code Block:** Output the complete JSON recipe object within a Markdown code block, using triple backticks and specifying "json" for syntax highlighting. This is crucial for easy copying and parsing.

**Example (Illustrative - You will generate the full recipe based on the description, including `keywords`):**

**Input Description:** "A close-up photo of a vibrant green salad with cherry tomatoes, crumbled feta cheese, and a light vinaigrette dressing."

**Output (Example Structure - You will generate the full JSON):**

```json
{
  "@context": "https://schema.org",
  "@type": "Recipe",
  "name": "Vibrant Green Salad with Feta and Cherry Tomatoes",
  "description": "A refreshing and colorful green salad featuring crisp greens, juicy cherry tomatoes, and salty feta cheese, lightly dressed with a tangy vinaigrette.",
  "recipeCategory": "Salad",
  "recipeCuisine": "Mediterranean",
  "prepTime": "PT10M",
  "cookTime": "PT0M",
  "totalTime": "PT10M",
  "recipeYield": "Serves 2",
  "recipeIngredient": [
    "5 oz mixed greens",
    "1 cup cherry tomatoes, halved",
    "4 oz feta cheese, crumbled",
    "1/4 cup olive oil",
    "2 tablespoons lemon juice",
    "1 tablespoon Dijon mustard",
    "1 clove garlic, minced",
    "Salt and pepper to taste"
  ],
  "recipeInstructions": [
    "In a large bowl, combine the mixed greens and cherry tomatoes.",
    "Sprinkle the crumbled feta cheese over the salad.",
    "In a small bowl, whisk together the olive oil, lemon juice, Dijon mustard, and minced garlic.",
    "Season the dressing with salt and pepper to taste.",
    "Pour the dressing over the salad and toss gently to combine.",
    "Serve immediately."
  ],
  "keywords": ["salad", "vegetarian", "easy", "quick", "fresh", "healthy", "lunch", "side dish"]
}
```
