Thanks for reading Plugged In, Fast Company’s weekly tech newsletter. If a friend or colleague forwarded this edition to you—or you’re reading it on FastCompany.com—you can check out previous issues and sign up to get it yourself every Wednesday morning. I look forward to receiving your feedback and ideas: Send them to me at hmccracken@fastcompany.com.

Before we proceed, here are some Fast Company tech stories you may not have read yet:

- The end of passwords? Amazon becomes the latest to adopt biometric alternatives

- Qualcomm’s Snapdragon X Elite chip is a big bet on the PC’s future

- Of course AI is coming for PowerPoint now

- Senator Mark Warner says Congress is already losing the plot on AI regulation

It’s a wonder I was able to finish this newsletter—or, really, get any work done at all over the past few days. You see, a vast percentage of my attention has been commandeered by DALL-E 3, the new update to OpenAI’s AI-infused image generator.

If you’d like an overview of what DALL-E 3 does and how it does it, you can’t do better than my colleague Mark Wilson’s story, for which he spoke with its creator, OpenAI’s Aditya Ramesh. In short, the service is built into ChatGPT Plus, letting those of us who pay for OpenAI’s chatbot create images in the same conversational manner we do when making purely textual requests. It also crafts its own detailed prompts based on your original prompt, which results in fully fleshed-out visuals even if you haven’t asked for anything terribly specific. And it’s just radically better at rendering complex scenes that pretty much make sense, with fewer of the bizarre glitches that were the norm with DALL-E 2.

Ramesh told Mark that the goal was to make DALL-E 3 simple enough for someone like “a casual user who just wants to generate images to fit into a PowerPoint.” Not having any practical uses for the tool at the moment, I’ve been using it to entertain myself—and boy, is it a success at that.

At its best, DALL-E 3 is eerily proficient at mimicking commercial art and pop-culture aesthetics of the past. It does wonderful fantasy magazine ads from the decade of your choice. It can make up toys that would go for big bucks if they somehow magically showed up at a flea market in the real world. And it produces comics whose dada vibe makes them feel like imports from Bizarro World.

Once I’ve entered a prompt, DALL-E 3 usually takes about 40 seconds to present four images based on it. It’s a period of anticipation that’s rare in the instant-gratification world of personal technology. I never know what I’m going to get, and I swear that makes the whole experience hypnotically addictive in a way it might not be if the results were immediate and predictable.

Sometimes, what DALL-E 3 does with my vague ideas sails way past my expectations. Other times, it’s off its game or chugs away for a bit before informing me that I’ve hit a rate limit and must try again later. It’s equally capable of chiding me for requests involving copyrighted material and inserting depictions of characters such as Donald Duck when I haven’t asked for them. And it often tantalizingly tells me it’s rejected one or more of its own prompts derived from my requests for violating its content policy. (Then again, I’m sometimes startled by the imagery it’s willing to tackle, such as pictures of cute cartoon children tending bar and operating casinos.)

There’s an improvisational, “yes, and” flavor to playing with DALL-E 3 that goes beyond what I’ve encountered with other AI tools. It thrives on seizing my spitballed material, running with it, and seeing where it leads. I can ask it for 1940s posters of animals engaging in household tasks, then let it come up with the precise scenarios: a tabby cat making pancakes, a parrot answering the phone, a raccoon typing, and (this is as off-color as I’ve seen it get) a dog reading a newspaper while sitting on the toilet. That’s way more fun than painstakingly trying to get it to create a picture I already have in my head, which is the norm with DALL-E 2 and other image generators I’ve tried.

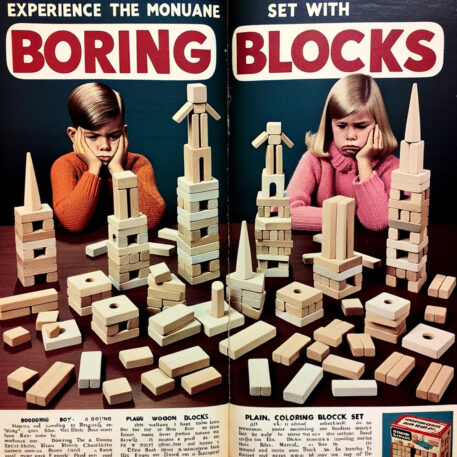

When ChatGPT was new, many people tried to get it to make up jokes that were genuinely funny, and usually failed. But DALL-E 3 has made me laugh out loud more than once, such as when it came up with a vintage ad for “Boring Blocks” showing kids sulking at the construction toy’s sheer tediousness. Does the algorithm comprehend the concept of humor? Or does the essential truth that it’s ultimately a fancy piece of math that doesn’t understand what it’s doing sometimes result in happy accidents? If the end result amuses me, does it matter?

I haven’t spent too much time wrestling with such questions yet. But here’s something I’ve found striking: Even when a DALL-E 3 image delights me, I’ve learned that sharing it doesn’t necessarily spread joy. Some friends instinctively wince at AI-generated content of any sort, like they’d react to a robot scratching its fingernails across a blackboard. Others refuse to give it a chance on the grounds that training generative AI models on the work of unwitting human artists amounts to a massive intellectual property heist, regardless of whether the results resemble any existing item in particular.

The fact that DALL-E 3 bothers people I respect bothers me. And I do worry about it getting so good that the pseudo-photographs and imaginary artifacts it creates might look convincingly real to someone who’s not paying attention. Any benefits it and similar products might offer humanity could be greatly outweighed by their potential to be used for deception.

Benj Edwards confronted the scary implications of undetectable visual fakery three years ago in a remarkable Fast Company article. When I edited it, I had no idea how prescient it would turn out to be. But here we are, and it’s not the least bit premature to fear such technology’s imminent arrival.

So it’s dawned on me that one of the things I like about DALL-E 3 is that in most cases, it remains obvious that you’re looking at something synthesized by a computer. Its “photos” usually look more like photo illustrations. Rendering mishaps such as mangled hands are greatly reduced compared to DALL-E 2, but they’re still there. And while the new version is much more adept at stringing together letters into actual words than its predecessor, it still speaks a language I think of as DALL-ese. That involves everything from randomly repeated letters to out-and-out gibberish. (Sample dialogue from a comic I had it generate: “Inflead to the map beach tave toys!”)

These imperfections aren’t just entertaining in their own right; they also amount to a tough-to-remove watermark identifying an image’s AI origins. I think of them as a feature, not a bug. And if DALL-E 4, 5, or 6 is another great leap forward toward computers getting so adept at expressing themselves visually that they rival human ability, I reserve the right to look back nostalgically at DALL-E 3 as the technology’s high point.
